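This article is an in-depth walkthrough of the official nuScenes devkit tutorials, of which there are six. It covers the first one, nuscenes_tutorial.ipynb (the Colab link is listed in the references at the end).

The six tutorials are: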

- nuscenes_tutorial.ipynb

- nuscenes_lidarseg_panoptic_tutorial.ipynb

- nuimages_tutorial.ipynb

- can_bus_tutorial.ipynb

- map_expansion_tutorial.ipynb

- prediction_tutorial.ipynb

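Background

The nuScenes dataset

- Publisher: Motional, an autonomous-driving technology company

- Download: https://scale.com/open-datasets/nuscenes/tutorial

- Paper: https://arxiv.org/abs/1903.11027

- Released: 2019

- Size: 547.98GB

- Overview: nuScenes is one of the most widely used public datasets in autonomous driving and a standard benchmark for camera-only 3D object detection. Inspired by KITTI, it was the first dataset to carry a full autonomous-vehicle sensor suite. It contains 1000 complex driving scenes recorded in Boston and Singapore. The dataset may not be used commercially.

Features

- Full sensor suite: 1 lidar, 5 radars, 6 cameras, GPS, IMU

- 1000 scenes of 20 seconds each (850 for training and validation, 150 for testing)

- 40,000 keyframes, 1.4 million camera images, 390,000 lidar sweeps, and 1.4 million radar sweeps

- 1.4 million 3D bounding boxes annotated across 23 object classes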

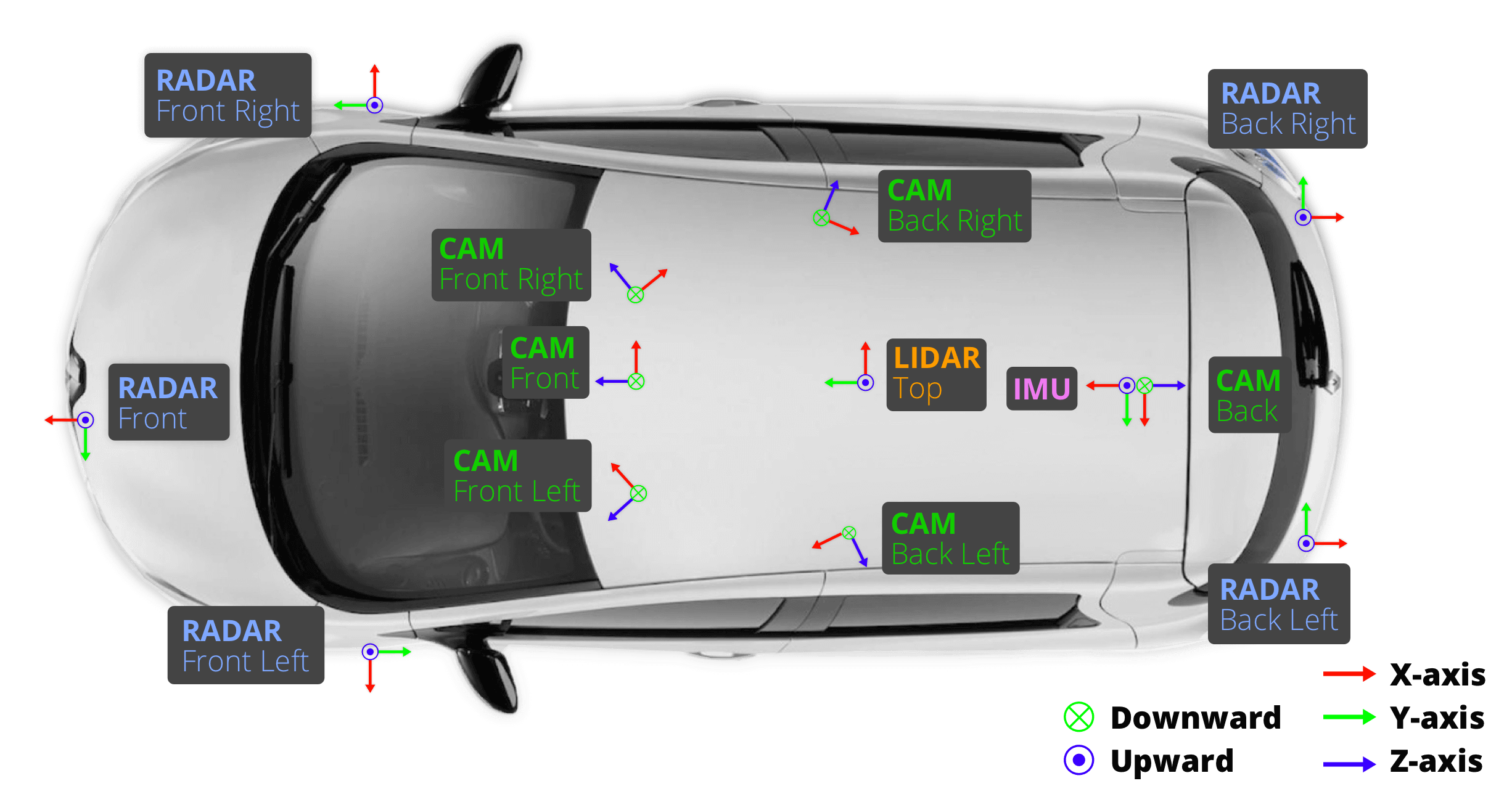

The sensors are arranged on the data-collection vehicle as follows:

- Six cameras (CAM): Front, Front Right, Front Left, Back, Back Right, and Back Left;

- One lidar (LIDAR), mounted on the roof (TOP);

- Five millimeter-wave radars (RADAR): Front, Front Right, Front Left, Back Right, and Back Left.

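Preparation

Downloading the dataset

Register first (an email address is enough), then open the download page: https://www.nuscenes.org/download. Under Full dataset (v1.0), find the Mini entry (md5: 791dd9ced556cfa1b425682f177b5d9b) and click Asia to download it. The files are hosted on AWS S3, so if the download is slow or stalls you may need a proxy.

The mini dataset provides 10 scenes, which is enough for exploring the data; there is no need to download the whole dataset.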

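Installing the devkit

I use Anaconda and launch Jupyter directly, so I skip the full installation procedure.

You can also follow the official devkit installation guide (see the nuscenes-devkit docs folder linked in the references).

Inspecting the dataset

Extract the downloaded archive into ~/data_sets. The full path is /Users/lau/data_sets/v1.0-mini; this path is used again later.

The archive contains: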

ll -a

total 24

drwx------@ 8 lau staff 256B 5 25 22:14 .

drwxr-xr-x 4 lau staff 128B 5 25 22:25 ..

-rw-rw-r--@ 1 lau staff 133B 3 23 2019 .v1.0-mini.txt

drwxr-xr-x@ 6 lau staff 192B 3 23 2019 maps

drwxrwxr-x@ 14 lau staff 448B 3 18 2019 samples

drwxrwxr-x@ 14 lau staff 448B 3 18 2019 sweeps

drwxr-xr-x@ 15 lau staff 480B 3 23 2019 v1.0-mini

The maps folder contains four map images.

The samples folder has the directory structure below, with one subfolder per sensor mentioned above (6 cameras, 1 lidar, 5 radars):

ls -1 samples

CAM_BACK

CAM_BACK_LEFT

CAM_BACK_RIGHT

CAM_FRONT

CAM_FRONT_LEFT

CAM_FRONT_RIGHT

LIDAR_TOP

RADAR_BACK_LEFT

RADAR_BACK_RIGHT

RADAR_FRONT

RADAR_FRONT_LEFT

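RADAR_FRONT_RIGHT

The sweeps folder has exactly the same structure as samples. The samples folder holds the keyframes, which are the frames that carry annotations; sweeps holds the intermediate frames recorded between keyframes.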

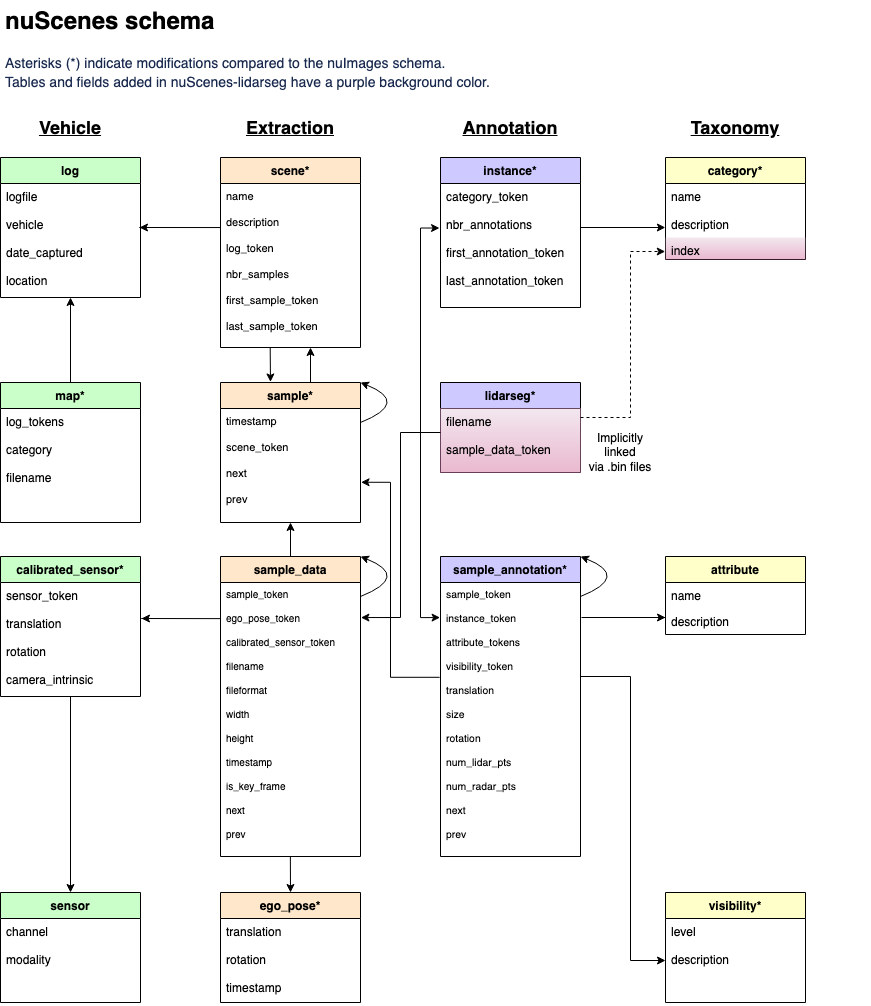
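The split between the two folders is also visible in the file names. Below is a minimal sketch, assuming the `<folder>/<channel>/<log>__<channel>__<timestamp>.<ext>` layout of the two file names quoted in the sample_data records later in this article. `parse_sample_data_filename` is a hypothetical helper, not part of the devkit, and treating everything under samples/ as a keyframe is an assumption that merely matches those two records.

```python
from pathlib import PurePosixPath


def parse_sample_data_filename(filename):
    """Split a sample_data file name into folder, log, channel and timestamp.

    Assumes '<folder>/<channel>/<log>__<channel>__<timestamp>.<ext>'.
    """
    path = PurePosixPath(filename)
    folder = path.parts[0]                 # 'samples' or 'sweeps'
    stem = path.name.split('.', 1)[0]      # drop '.pcd.bin', '.pcd', ...
    log_name, channel, timestamp = stem.split('__')
    return {
        'folder': folder,
        'is_key_frame': folder == 'samples',   # assumption, see lead-in
        'log': log_name,
        'channel': channel,
        'timestamp_us': int(timestamp),
    }


lidar = parse_sample_data_filename(
    'samples/LIDAR_TOP/n015-2018-07-24-11-22-45+0800__LIDAR_TOP__1532402927647951.pcd.bin')
radar = parse_sample_data_filename(
    'sweeps/RADAR_FRONT/n015-2018-07-24-11-22-45+0800__RADAR_FRONT__1532402928269133.pcd')
print(lidar['channel'], lidar['is_key_frame'])
print(radar['channel'], radar['is_key_frame'])
```

In practice the `is_key_frame` field of each sample_data record is the reliable source; the folder name is only a convenient hint.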

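The v1.0-mini folder contains a set of JSON files. Together they implement the database schema used by nuScenes: all annotations and metadata (including calibration, maps, vehicle coordinates, and so on) are stored in a relational database. The tables are listed below. Every row is identified by its unique primary key, token. Foreign keys such as sample_token link to the token of a row in the sample table.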

ls -1 v1.0-mini

attribute.json

calibrated_sensor.json

category.json

ego_pose.json

instance.json

log.json

map.json

sample.json

sample_annotation.json

sample_data.json

scene.json

sensor.json

visibility.json

For details, see: https://github.com/nutonomy/nuscenes-devkit/blob/master/docs/schema_nuscenes.md
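To make the token and foreign-key idea concrete, here is a minimal sketch that uses toy in-memory rows instead of the real JSON files. The tokens and the helper names `build_token_index` and `find_tokens` are made up for illustration. They are similar in spirit to the devkit's `nusc.get(table, token)` and `nusc.field2token(table, field, value)` used later in this article, but this is not the devkit's implementation.

```python
def build_token_index(rows):
    """Map each row's 'token' to the row itself for constant-time lookups."""
    return {row['token']: row for row in rows}


def find_tokens(rows, field, value):
    """Return the tokens of all rows whose `field` equals `value` (linear scan)."""
    return [row['token'] for row in rows if row[field] == value]


# Toy rows; these tokens are invented and do not exist in the dataset.
scenes = [{'token': 's1', 'name': 'scene-A', 'first_sample_token': 'a'}]
samples = [
    {'token': 'a', 'scene_token': 's1', 'next': 'b'},
    {'token': 'b', 'scene_token': 's1', 'next': ''},
]

scene_by_token = build_token_index(scenes)
sample_by_token = build_token_index(samples)

# Follow a foreign key from a sample row to its parent scene row.
parent = scene_by_token[sample_by_token['b']['scene_token']]
print(parent['name'])
print(find_tokens(samples, 'scene_token', 's1'))
```

Every table can be indexed the same way, which is why a token is all that is needed to jump from one record to a related one.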

Reading the dataset

Setup

Start Jupyter and create nuscenes-devkit.ipynb

Install the library

pip install nuscenes-devkit

Import the module and load the dataset

%matplotlib inline

from nuscenes.nuscenes import NuScenes

nusc = NuScenes(version='v1.0-mini', dataroot='/Users/lau/data_sets/v1.0-mini', verbose=True)

Running this prints the following:

======

Loading NuScenes tables for version v1.0-mini...

23 category,

8 attribute,

4 visibility,

911 instance,

12 sensor,

120 calibrated_sensor,

31206 ego_pose,

8 log,

10 scene,

404 sample,

31206 sample_data,

18538 sample_annotation,

4 map,

Done loading in 0.794 seconds.

======

Reverse indexing ...

Done reverse indexing in 0.1 seconds.

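======

The tables are:

1. log: information about the log from which the data was extracted.

2. scene: a 20-second snippet of the car's journey.

3. sample: an annotated snapshot of a scene at a particular timestamp.

4. sample_data: data collected from a particular sensor.

5. ego_pose: the ego vehicle's pose at a particular timestamp.

6. sensor: a specific sensor type.

7. calibrated_sensor: a particular sensor as calibrated on a particular vehicle.

8. instance: an enumeration of all object instances that were observed.

9. category: the taxonomy of object categories (e.g. vehicle, human).

10. attribute: a property of an instance that can change while its category stays the same.

11. visibility: the fraction of an object's pixels visible across the images collected from the 6 cameras.

12. sample_annotation: an annotated instance of an object of interest.

13. map: map data stored as binary semantic masks in a top-down view.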

Scene (scene)

nusc.list_scenes()

# Output

# Each line shows: scene name, scene description, capture time, duration, location, number of annotations

scene-0061, Parked truck, construction, intersectio... [18-07-24 03:28:47] 19s, singapore-onenorth, #anns:4622

scene-0103, Many peds right, wait for turning car, ... [18-08-01 19:26:43] 19s, boston-seaport, #anns:2046

scene-0655, Parking lot, parked cars, jaywalker, be... [18-08-27 15:51:32] 20s, boston-seaport, #anns:2332

scene-0553, Wait at intersection, bicycle, large tr... [18-08-28 20:48:16] 20s, boston-seaport, #anns:1950

scene-0757, Arrive at busy intersection, bus, wait ... [18-08-30 19:25:08] 20s, boston-seaport, #anns:592

scene-0796, Scooter, peds on sidewalk, bus, cars, t... [18-10-02 02:52:24] 20s, singapore-queensto, #anns:708

scene-0916, Parking lot, bicycle rack, parked bicyc... [18-10-08 07:37:13] 20s, singapore-queensto, #anns:2387

scene-1077, Night, big street, bus stop, high speed... [18-11-21 11:39:27] 20s, singapore-hollandv, #anns:890

scene-1094, Night, after rain, many peds, PMD, ped ... [18-11-21 11:47:27] 19s, singapore-hollandv, #anns:1762

scene-1100, Night, peds in sidewalk, peds cross cro... [18-11-21 11:49:47] 19s, singapore-hollandv, #anns:935

my_scene = nusc.scene[0]

my_scene

# Output

# Related records can be looked up through these tokens

{'token': 'cc8c0bf57f984915a77078b10eb33198',

'log_token': '7e25a2c8ea1f41c5b0da1e69ecfa71a2',

'nbr_samples': 39,

'first_sample_token': 'ca9a282c9e77460f8360f564131a8af5',

'last_sample_token': 'ed5fc18c31904f96a8f0dbb99ff069c0',

'name': 'scene-0061',

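'description': 'Parked truck, construction, intersection, turn left, following a van'}

Sample (sample)

First, how a sample relates to a scene. As noted above, each scene lasts about 20 s, and a sample is taken every 0.5 s. Put another way, a scene is like a 20 s video, and the samples are the frames picked from it every 0.5 s.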
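Samples within a scene are chained through their prev and next tokens, so a scene can be walked from its first sample to its last. Below is a minimal sketch of that traversal on toy data. `iter_samples` is a hypothetical helper, and the toy tokens and timestamps (in microseconds, 0.5 s apart) are invented.

```python
def iter_samples(get_sample, first_token):
    """Yield sample records in order by following 'next' until it is empty."""
    token = first_token
    while token:
        sample = get_sample(token)
        yield sample
        token = sample['next']


# Toy records standing in for the sample table.
toy = {
    't0': {'token': 't0', 'timestamp': 0, 'next': 't1'},
    't1': {'token': 't1', 'timestamp': 500_000, 'next': 't2'},
    't2': {'token': 't2', 'timestamp': 1_000_000, 'next': ''},
}

ordered = list(iter_samples(toy.__getitem__, 't0'))
gaps_s = [(b['timestamp'] - a['timestamp']) / 1e6
          for a, b in zip(ordered, ordered[1:])]
print(len(ordered), gaps_s)  # 3 [0.5, 0.5]
```

With the devkit loaded, the same helper should work as `iter_samples(lambda t: nusc.get('sample', t), my_scene['first_sample_token'])`, and the number of records it yields should match the scene's nbr_samples.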

first_sample_token = my_scene['first_sample_token']  # token of the first sample

first_sample_token

# Output

'ca9a282c9e77460f8360f564131a8af5'

my_sample = nusc.get('sample', first_sample_token)

my_sample

# Output

# The record includes the sensor data tokens, the annotation tokens, and more

{'token': 'ca9a282c9e77460f8360f564131a8af5',

'timestamp': 1532402927647951,

'prev': '',

'next': '39586f9d59004284a7114a68825e8eec',

'scene_token': 'cc8c0bf57f984915a77078b10eb33198',

'data': {'RADAR_FRONT': '37091c75b9704e0daa829ba56dfa0906',

'RADAR_FRONT_LEFT': '11946c1461d14016a322916157da3c7d',

'RADAR_FRONT_RIGHT': '491209956ee3435a9ec173dad3aaf58b',

'RADAR_BACK_LEFT': '312aa38d0e3e4f01b3124c523e6f9776',

'RADAR_BACK_RIGHT': '07b30d5eb6104e79be58eadf94382bc1',

'LIDAR_TOP': '9d9bf11fb0e144c8b446d54a8a00184f',

'CAM_FRONT': 'e3d495d4ac534d54b321f50006683844',

'CAM_FRONT_RIGHT': 'aac7867ebf4f446395d29fbd60b63b3b',

'CAM_BACK_RIGHT': '79dbb4460a6b40f49f9c150cb118247e',

'CAM_BACK': '03bea5763f0f4722933508d5999c5fd8',

'CAM_BACK_LEFT': '43893a033f9c46d4a51b5e08a67a1eb7',

'CAM_FRONT_LEFT': 'fe5422747a7d4268a4b07fc396707b23'},

'anns': ['ef63a697930c4b20a6b9791f423351da',

'6b89da9bf1f84fd6a5fbe1c3b236f809',

...

'2bfcc693ae9946daba1d9f2724478fd4']}

nusc.list_sample(my_sample['token'])

# Output

# Lists the keyframe sample_data records and the sample_annotation records associated with the sample

Sample: ca9a282c9e77460f8360f564131a8af5

sample_data_token: 37091c75b9704e0daa829ba56dfa0906, mod: radar, channel: RADAR_FRONT

sample_data_token: 11946c1461d14016a322916157da3c7d, mod: radar, channel: RADAR_FRONT_LEFT

sample_data_token: 491209956ee3435a9ec173dad3aaf58b, mod: radar, channel: RADAR_FRONT_RIGHT

sample_data_token: 312aa38d0e3e4f01b3124c523e6f9776, mod: radar, channel: RADAR_BACK_LEFT

sample_data_token: 07b30d5eb6104e79be58eadf94382bc1, mod: radar, channel: RADAR_BACK_RIGHT

sample_data_token: 9d9bf11fb0e144c8b446d54a8a00184f, mod: lidar, channel: LIDAR_TOP

sample_data_token: e3d495d4ac534d54b321f50006683844, mod: camera, channel: CAM_FRONT

sample_data_token: aac7867ebf4f446395d29fbd60b63b3b, mod: camera, channel: CAM_FRONT_RIGHT

sample_data_token: 79dbb4460a6b40f49f9c150cb118247e, mod: camera, channel: CAM_BACK_RIGHT

sample_data_token: 03bea5763f0f4722933508d5999c5fd8, mod: camera, channel: CAM_BACK

sample_data_token: 43893a033f9c46d4a51b5e08a67a1eb7, mod: camera, channel: CAM_BACK_LEFT

sample_data_token: fe5422747a7d4268a4b07fc396707b23, mod: camera, channel: CAM_FRONT_LEFT

sample_annotation_token: ef63a697930c4b20a6b9791f423351da, category: human.pedestrian.adult

...

sample_annotation_token: 2bfcc693ae9946daba1d9f2724478fd4, category: movable_object.barrier

Sample data (sample_data)

Get the sample data

my_sample['data']

# Output

{'RADAR_FRONT': '37091c75b9704e0daa829ba56dfa0906',

'RADAR_FRONT_LEFT': '11946c1461d14016a322916157da3c7d',

'RADAR_FRONT_RIGHT': '491209956ee3435a9ec173dad3aaf58b',

'RADAR_BACK_LEFT': '312aa38d0e3e4f01b3124c523e6f9776',

'RADAR_BACK_RIGHT': '07b30d5eb6104e79be58eadf94382bc1',

'LIDAR_TOP': '9d9bf11fb0e144c8b446d54a8a00184f',

'CAM_FRONT': 'e3d495d4ac534d54b321f50006683844',

'CAM_FRONT_RIGHT': 'aac7867ebf4f446395d29fbd60b63b3b',

'CAM_BACK_RIGHT': '79dbb4460a6b40f49f9c150cb118247e',

'CAM_BACK': '03bea5763f0f4722933508d5999c5fd8',

'CAM_BACK_LEFT': '43893a033f9c46d4a51b5e08a67a1eb7',

'CAM_FRONT_LEFT': 'fe5422747a7d4268a4b07fc396707b23'}

Get the metadata for LIDAR_TOP

sensor = 'LIDAR_TOP'

lidar_top = nusc.get('sample_data', my_sample['data'][sensor])

lidar_top

# Output

{'token': '9d9bf11fb0e144c8b446d54a8a00184f',

'sample_token': 'ca9a282c9e77460f8360f564131a8af5',

'ego_pose_token': '9d9bf11fb0e144c8b446d54a8a00184f',

'calibrated_sensor_token': 'a183049901c24361a6b0b11b8013137c',

'timestamp': 1532402927647951,

'fileformat': 'pcd',

'is_key_frame': True,

'height': 0,

'width': 0,

'filename': 'samples/LIDAR_TOP/n015-2018-07-24-11-22-45+0800__LIDAR_TOP__1532402927647951.pcd.bin',

'prev': '',

'next': '0cedf1d2d652468d92d23491136b5d15',

'sensor_modality': 'lidar',

'channel': 'LIDAR_TOP'}

Visualize the data:

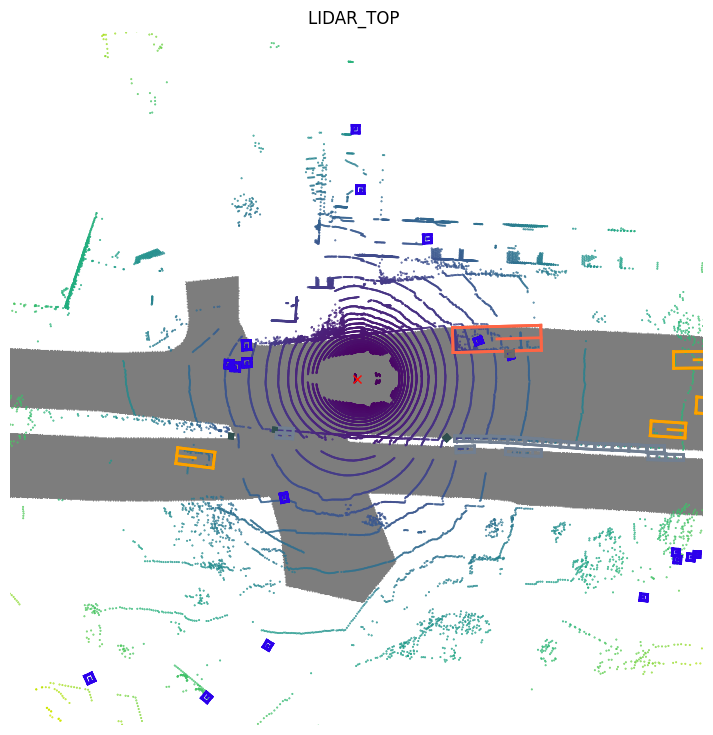

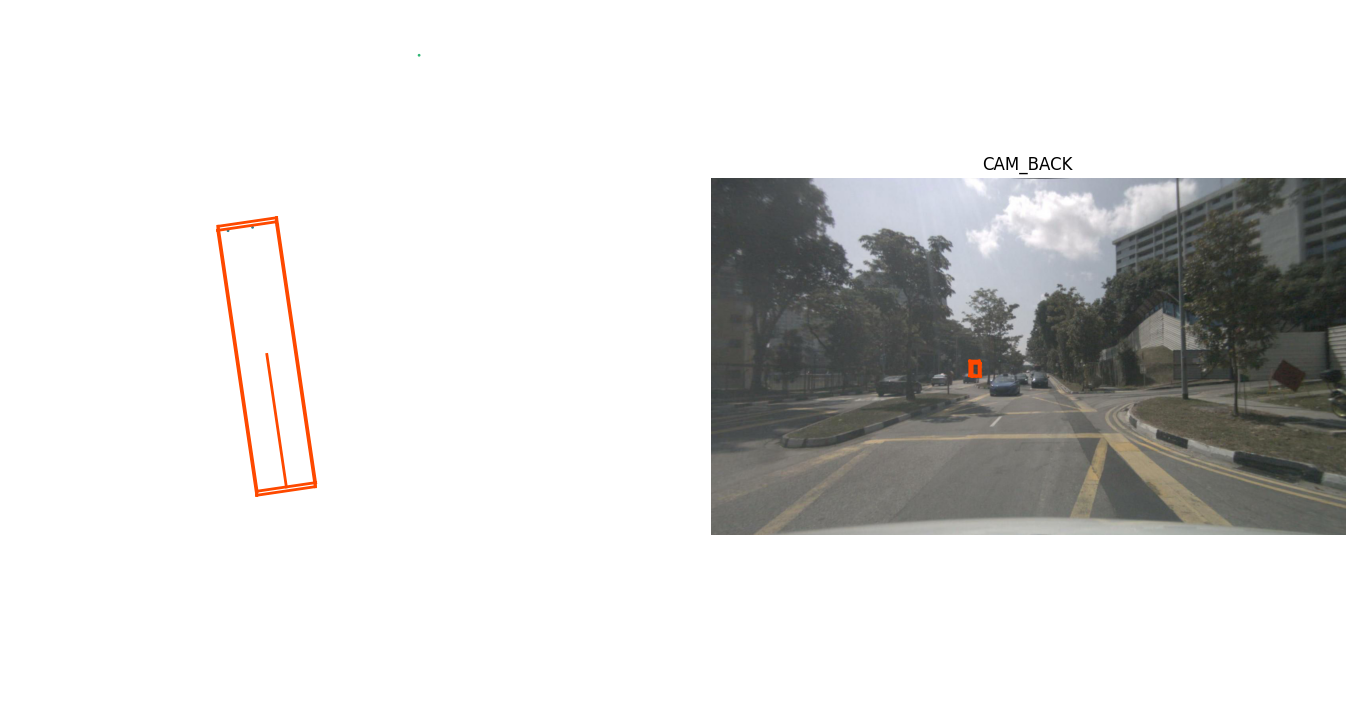

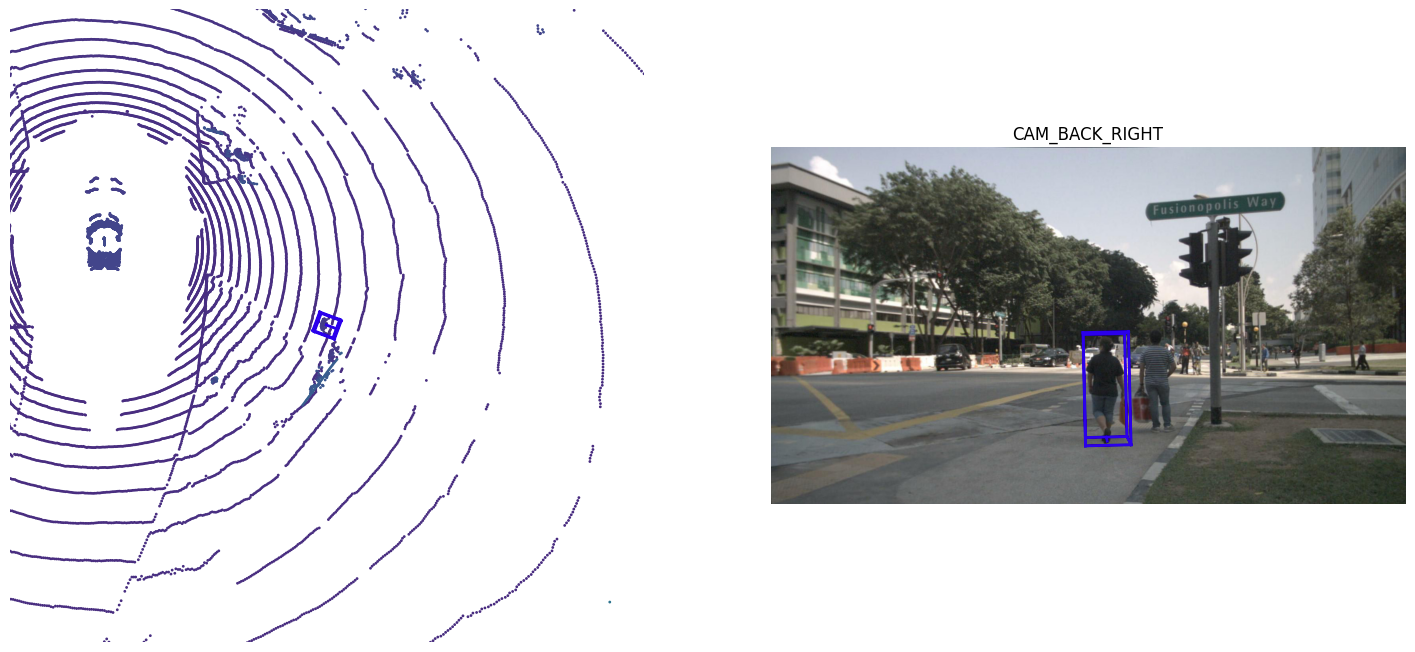

nusc.render_sample_data(lidar_top['token'])

Only LIDAR_TOP, the roof-mounted lidar, is visualized here; the other sensors can be visualized the same way.
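The timestamp fields in these records appear to be Unix time in microseconds: the LIDAR_TOP timestamp above converts to the same 03:28:47 that list_scenes printed for scene-0061. Below is a small sketch of that conversion using only the standard library. `timestamp_to_utc` is a hypothetical helper name.

```python
from datetime import datetime, timezone


def timestamp_to_utc(timestamp_us):
    """Convert a microsecond Unix timestamp to a timezone-aware UTC datetime."""
    seconds, micros = divmod(timestamp_us, 1_000_000)
    return datetime.fromtimestamp(seconds, tz=timezone.utc).replace(microsecond=micros)


# Timestamp of the LIDAR_TOP keyframe shown above.
print(timestamp_to_utc(1532402927647951).isoformat())
# 2018-07-24T03:28:47.647951+00:00
```

Splitting into whole seconds and microseconds with `divmod` avoids the rounding that a float division by 1e6 could introduce.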

Sample annotation (sample_annotation)

Get a sample annotation

my_annotation_token = my_sample['anns'][18]

my_annotation_metadata = nusc.get('sample_annotation', my_annotation_token)

my_annotation_metadata

# Output

{'token': '83d881a6b3d94ef3a3bc3b585cc514f8',

'sample_token': 'ca9a282c9e77460f8360f564131a8af5',

'instance_token': 'e91afa15647c4c4994f19aeb302c7179',

'visibility_token': '4',

'attribute_tokens': ['58aa28b1c2a54dc88e169808c07331e3'],

'translation': [409.989, 1164.099, 1.623],

'size': [2.877, 10.201, 3.595],

'rotation': [-0.5828819500503033, 0.0, 0.0, 0.812556848660791],

'prev': '',

'next': 'f3721bdfd7ee4fd2a4f94874286df471',

'num_lidar_pts': 495,

'num_radar_pts': 13,

'category_name': 'vehicle.truck'}

Visualize the data:

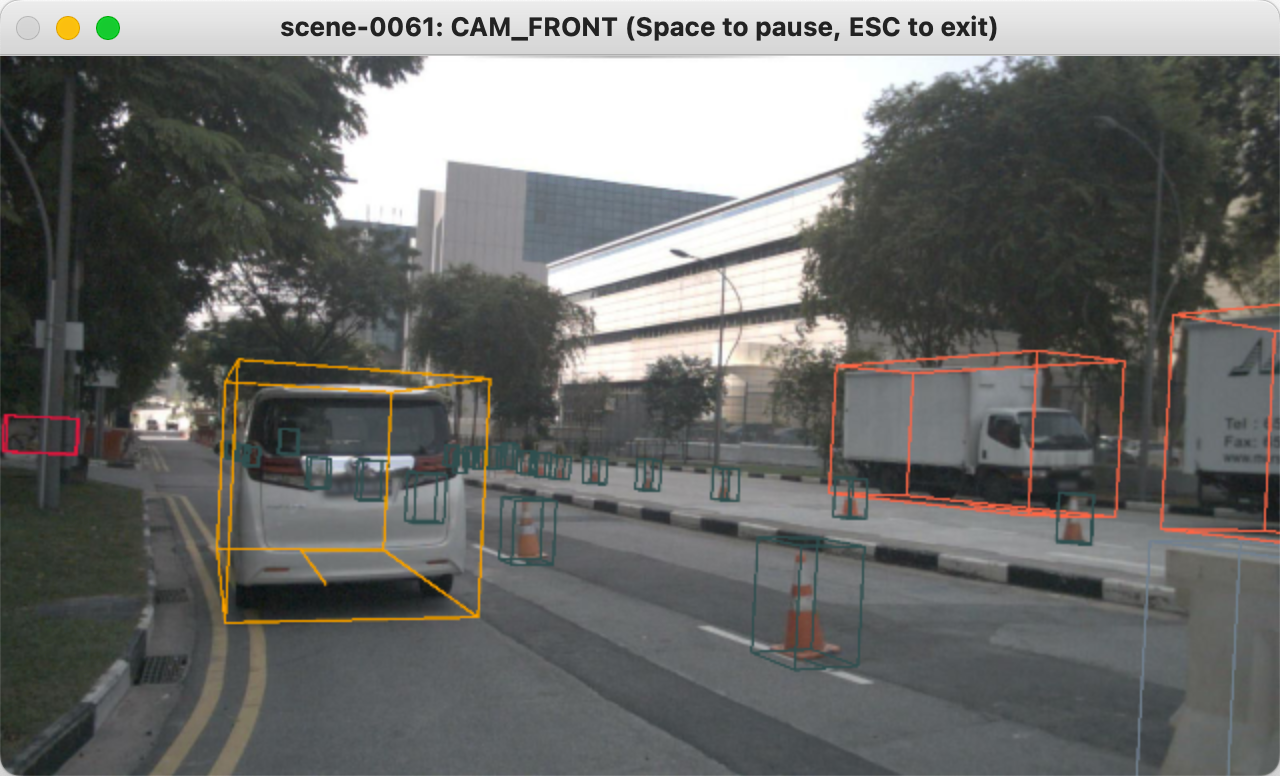

nusc.render_annotation(my_annotation_token)
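The translation, size and rotation fields describe the 3D box itself. Below is a minimal sketch of how two of them can be interpreted, assuming the conventions from the nuScenes schema: rotation is a quaternion in [w, x, y, z] order and size is [width, length, height] in meters. `quaternion_yaw` and `box_footprint_area` are hypothetical helpers written in plain Python, not devkit functions.

```python
import math


def quaternion_yaw(q):
    """Heading (rotation about the z axis) in radians for q = [w, x, y, z]."""
    w, x, y, z = q
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))


def box_footprint_area(size):
    """Ground-plane area in square meters for size = [width, length, height]."""
    width, length, _height = size
    return width * length


# Values copied from the truck annotation above.
truck_rotation = [-0.5828819500503033, 0.0, 0.0, 0.812556848660791]
truck_size = [2.877, 10.201, 3.595]

yaw_deg = math.degrees(quaternion_yaw(truck_rotation))
area = box_footprint_area(truck_size)
print(round(yaw_deg, 1), round(area, 2))
```

Because the x and y components of this quaternion are zero, the box is rotated purely about the vertical axis, which is typical for vehicles on flat ground.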

Instance (instance)

Get the instance at index 599

my_instance = nusc.instance[599]

my_instance

# Output

{'token': '9cba9cd8af85487fb010652c90d845b5',

'category_token': 'fedb11688db84088883945752e480c2c',

'nbr_annotations': 16,

'first_annotation_token': '77afa772cb4a4e5c8a5a53f2019bdba0',

'last_annotation_token': '6fed6d902e5e487abb7444f62e1a2341'}

Visualize this instance

instance_token = my_instance['token']

nusc.render_instance(instance_token)

Visualize the first annotation of this instance

print("First annotated sample of this instance:")

nusc.render_annotation(my_instance['first_annotation_token'])

Visualize the last annotation of this instance

print("Last annotated sample of this instance")

nusc.render_annotation(my_instance['last_annotation_token'])

The chain of annotations can be read as the track of this instance through the scene.

Category (category)

Get the categories

nusc.list_categories()

# Output

Category stats for split v1.0-mini:

human.pedestrian.adult n= 4765, width= 0.68±0.11, len= 0.73±0.17, height= 1.76±0.12, lw_aspect= 1.08±0.23

human.pedestrian.child n= 46, width= 0.46±0.08, len= 0.45±0.09, height= 1.37±0.06, lw_aspect= 0.97±0.05

human.pedestrian.constructi n= 193, width= 0.69±0.07, len= 0.74±0.12, height= 1.78±0.05, lw_aspect= 1.07±0.16

human.pedestrian.personal_m n= 25, width= 0.83±0.00, len= 1.28±0.00, height= 1.87±0.00, lw_aspect= 1.55±0.00

human.pedestrian.police_off n= 11, width= 0.59±0.00, len= 0.47±0.00, height= 1.81±0.00, lw_aspect= 0.80±0.00

movable_object.barrier n= 2323, width= 2.32±0.49, len= 0.61±0.11, height= 1.06±0.10, lw_aspect= 0.28±0.09

movable_object.debris n= 13, width= 0.43±0.00, len= 1.43±0.00, height= 0.46±0.00, lw_aspect= 3.35±0.00

movable_object.pushable_pul n= 82, width= 0.51±0.06, len= 0.79±0.10, height= 1.04±0.20, lw_aspect= 1.55±0.18

movable_object.trafficcone n= 1378, width= 0.47±0.14, len= 0.45±0.07, height= 0.78±0.13, lw_aspect= 0.99±0.12

static_object.bicycle_rack n= 54, width= 2.67±1.46, len=10.09±6.19, height= 1.40±0.00, lw_aspect= 5.97±4.02

vehicle.bicycle n= 243, width= 0.64±0.12, len= 1.82±0.14, height= 1.39±0.34, lw_aspect= 2.94±0.41

vehicle.bus.bendy n= 57, width= 2.83±0.09, len= 9.23±0.33, height= 3.32±0.07, lw_aspect= 3.27±0.22

vehicle.bus.rigid n= 353, width= 2.95±0.26, len=11.46±1.79, height= 3.80±0.62, lw_aspect= 3.88±0.57

vehicle.car n= 7619, width= 1.92±0.16, len= 4.62±0.36, height= 1.69±0.21, lw_aspect= 2.41±0.18

vehicle.construction n= 196, width= 2.58±0.35, len= 5.57±1.57, height= 2.38±0.33, lw_aspect= 2.18±0.62

vehicle.motorcycle n= 471, width= 0.68±0.21, len= 1.95±0.38, height= 1.47±0.20, lw_aspect= 3.00±0.62

vehicle.trailer n= 60, width= 2.28±0.08, len=10.14±5.69, height= 3.71±0.27, lw_aspect= 4.37±2.41

vehicle.truck n= 649, width= 2.35±0.34, len= 6.50±1.56, height= 2.62±0.68, lw_aspect= 2.75±0.37

Get the details of the category at index i (here i = 9)

nusc.category[9]

# Output

{'token': 'dfd26f200ade4d24b540184e16050022',

'name': 'vehicle.motorcycle',

'description': 'Gasoline or electric powered 2-wheeled vehicle designed to move rapidly (at the speed of standard cars) on the road surface. This category includes all motorcycles, vespas and scooters.'}

Attribute (attribute)

Get the attributes

nusc.list_attributes()

# Output

cycle.with_rider: 305

cycle.without_rider: 434

pedestrian.moving: 3875

pedestrian.sitting_lying_down: 111

pedestrian.standing: 1029

vehicle.moving: 2715

vehicle.parked: 4674

vehicle.stopped: 1545

Within a scene, an instance's attributes can change. The code below shows a pedestrian's attribute changing from moving to standing:

my_instance = nusc.instance[27]

first_token = my_instance['first_annotation_token']

last_token = my_instance['last_annotation_token']

nbr_samples = my_instance['nbr_annotations']

current_token = first_token

i = 0

found_change = False

while current_token != last_token:

    current_ann = nusc.get('sample_annotation', current_token)

    current_attr = nusc.get('attribute', current_ann['attribute_tokens'][0])['name']

    if i == 0:

        pass

    elif current_attr != last_attr:

        print("Changed from `{}` to `{}` at timestamp {} out of {} annotated timestamps".format(last_attr, current_attr, i, nbr_samples))

        found_change = True

    next_token = current_ann['next']

    current_token = next_token

    last_attr = current_attr

    i += 1

# Output

Changed from `pedestrian.moving` to `pedestrian.standing` at timestamp 21 out of 39 annotated timestamps

Visibility (visibility)

Get the visibility levels

nusc.visibility

# Output

[{'description': 'visibility of whole object is between 0 and 40%',

'token': '1',

'level': 'v0-40'},

{'description': 'visibility of whole object is between 40 and 60%',

'token': '2',

'level': 'v40-60'},

{'description': 'visibility of whole object is between 60 and 80%',

'token': '3',

'level': 'v60-80'},

{'description': 'visibility of whole object is between 80 and 100%',

'token': '4',

'level': 'v80-100'}]

Get a sample annotation with visibility v80-100

anntoken = 'a7d0722bce164f88adf03ada491ea0ba'

visibility_token = nusc.get('sample_annotation', anntoken)['visibility_token']

print("Visibility: {}".format(nusc.get('visibility', visibility_token)))

nusc.render_annotation(anntoken)

Visibility: {'description': 'visibility of whole object is between 80 and 100%', 'token': '4', 'level': 'v80-100'}
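The four visibility levels are simply bins over the visible fraction of an object. Below is a minimal sketch of that binning, with tokens and level names taken from the table above. `visibility_level` is a hypothetical helper, and treating each lower bound as inclusive is an assumption, since the table does not say which bin owns a boundary value such as exactly 40%.

```python
# (upper bound, token, level); lower bounds are treated as inclusive (assumption).
VISIBILITY_BINS = [
    (0.4, '1', 'v0-40'),
    (0.6, '2', 'v40-60'),
    (0.8, '3', 'v60-80'),
    (1.0, '4', 'v80-100'),
]


def visibility_level(fraction):
    """Return (token, level) for a visible fraction between 0 and 1."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError('fraction must be between 0 and 1')
    for upper, token, level in VISIBILITY_BINS:
        if fraction < upper:
            return token, level
    return VISIBILITY_BINS[-1][1:]  # fraction == 1.0 falls in the top bin


print(visibility_level(0.9))
print(visibility_level(0.25))
```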

Sensor (sensor)

Get the sensors

nusc.sensor

# Output

[{'token': '725903f5b62f56118f4094b46a4470d8',

'channel': 'CAM_FRONT',

'modality': 'camera'},

{'token': 'ce89d4f3050b5892b33b3d328c5e82a3',

'channel': 'CAM_BACK',

'modality': 'camera'},

{'token': 'a89643a5de885c6486df2232dc954da2',

'channel': 'CAM_BACK_LEFT',

'modality': 'camera'},

{'token': 'ec4b5d41840a509984f7ec36419d4c09',

'channel': 'CAM_FRONT_LEFT',

'modality': 'camera'},

{'token': '2f7ad058f1ac5557bf321c7543758f43',

'channel': 'CAM_FRONT_RIGHT',

'modality': 'camera'},

{'token': 'ca7dba2ec9f95951bbe67246f7f2c3f7',

'channel': 'CAM_BACK_RIGHT',

'modality': 'camera'},

{'token': 'dc8b396651c05aedbb9cdaae573bb567',

'channel': 'LIDAR_TOP',

'modality': 'lidar'},

{'token': '47fcd48f71d75e0da5c8c1704a9bfe0a',

'channel': 'RADAR_FRONT',

'modality': 'radar'},

{'token': '232a6c4dc628532e81de1c57120876e9',

'channel': 'RADAR_FRONT_RIGHT',

'modality': 'radar'},

{'token': '1f69f87a4e175e5ba1d03e2e6d9bcd27',

'channel': 'RADAR_FRONT_LEFT',

'modality': 'radar'},

{'token': 'df2d5b8be7be55cca33c8c92384f2266',

'channel': 'RADAR_BACK_LEFT',

'modality': 'radar'},

{'token': '5c29dee2f70b528a817110173c2e71b9',

'channel': 'RADAR_BACK_RIGHT',

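'modality': 'radar'}]

Each sample_data record also stores the modality and channel of the sensor that produced it, so sensor information can be read from nusc.sample_data[i] as well

nusc.sample_data[10]

# Output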

{'token': '2ecfec536d984fb491098c9db1404117',

'sample_token': '356d81f38dd9473ba590f39e266f54e5',

'ego_pose_token': '2ecfec536d984fb491098c9db1404117',

'calibrated_sensor_token': 'f4d2a6c281f34a7eb8bb033d82321f79',

'timestamp': 1532402928269133,

'fileformat': 'pcd',

'is_key_frame': False,

'height': 0,

'width': 0,

'filename': 'sweeps/RADAR_FRONT/n015-2018-07-24-11-22-45+0800__RADAR_FRONT__1532402928269133.pcd',

'prev': 'b933bbcb4ee84a7eae16e567301e1df2',

'next': '79ef24d1eba84f5abaeaf76655ef1036',

'sensor_modality': 'radar',

'channel': 'RADAR_FRONT'}

Calibrated sensor (calibrated_sensor)

Get the calibration of a sensor

nusc.calibrated_sensor[0]

# Output

{'token': 'f4d2a6c281f34a7eb8bb033d82321f79',

'sensor_token': '47fcd48f71d75e0da5c8c1704a9bfe0a',

'translation': [3.412, 0.0, 0.5],

'rotation': [0.9999984769132877, 0.0, 0.0, 0.0017453283658983088],

'camera_intrinsic': []}

Ego pose (ego_pose)

nusc.ego_pose[0]

# Output

{'token': '5ace90b379af485b9dcb1584b01e7212',

'timestamp': 1532402927814384,

'rotation': [0.5731787718287827,

-0.0015811634307974854,

0.013859363182046986,

-0.8193116095230444],

'translation': [410.77878632230204, 1179.4673290964536, 0.0]}

Log (log)

Get the number of logs

print("Number of `logs` in our loaded database: {}".format(len(nusc.log)))

# Output

Number of `logs` in our loaded database: 8

Get a log

nusc.log[0]

# Output

{'token': '7e25a2c8ea1f41c5b0da1e69ecfa71a2',

'logfile': 'n015-2018-07-24-11-22-45+0800',

'vehicle': 'n015',

'date_captured': '2018-07-24',

'location': 'singapore-onenorth',

'map_token': '53992ee3023e5494b90c316c183be829'}

Map (map)

Get the number of maps

print("There are {} maps masks in the loaded dataset".format(len(nusc.map)))

# Output

There are 4 maps masks in the loaded dataset

Get a map

nusc.map[0]

# Output

{'category': 'semantic_prior',

'token': '53992ee3023e5494b90c316c183be829',

'filename': 'maps/53992ee3023e5494b90c316c183be829.png',

'log_tokens': ['0986cb758b1d43fdaa051ab23d45582b',

'1c9b302455ff44a9a290c372b31aa3ce',

'e60234ec7c324789ac7c8441a5e49731',

'46123a03f41e4657adc82ed9ddbe0ba2',

'a5bb7f9dd1884f1ea0de299caefe7ef4',

'bc41a49366734ebf978d6a71981537dc',

'f8699afb7a2247e38549e4d250b4581b',

'd0450edaed4a46f898403f45fa9e5f0d',

'f38ef5a1e9c941aabb2155768670b92a',

'7e25a2c8ea1f41c5b0da1e69ecfa71a2',

'ddc03471df3e4c9bb9663629a4097743',

'31e9939f05c1485b88a8f68ad2cf9fa4',

'783683d957054175bda1b326453a13f4',

'343d984344e440c7952d1e403b572b2a',

'92af2609d31445e5a71b2d895376fed6',

'47620afea3c443f6a761e885273cb531',

'd31dc715d1c34b99bd5afb0e3aea26ed',

'34d0574ea8f340179c82162c6ac069bc',

'd7fd2bb9696d43af901326664e42340b',

'b5622d4dcb0d4549b813b3ffb96fbdc9',

'da04ae0b72024818a6219d8dd138ea4b',

'6b6513e6c8384cec88775cae30b78c0e',

'eda311bda86f4e54857b0554639d6426',

'cfe71bf0b5c54aed8f56d4feca9a7f59',

'ee155e99938a4c2698fed50fc5b5d16a',

'700b800c787842ba83493d9b2775234a'],

'mask': <nuscenes.utils.map_mask.MapMask at 0x7fb4c3e8a9b0>}

Advanced

Playing back sensor data

Play back a single sensor channel

my_scene_token = nusc.field2token('scene', 'name', 'scene-0061')[0]

nusc.render_scene_channel(my_scene_token, 'CAM_FRONT')

Play back all sensors

nusc.render_scene(my_scene_token)

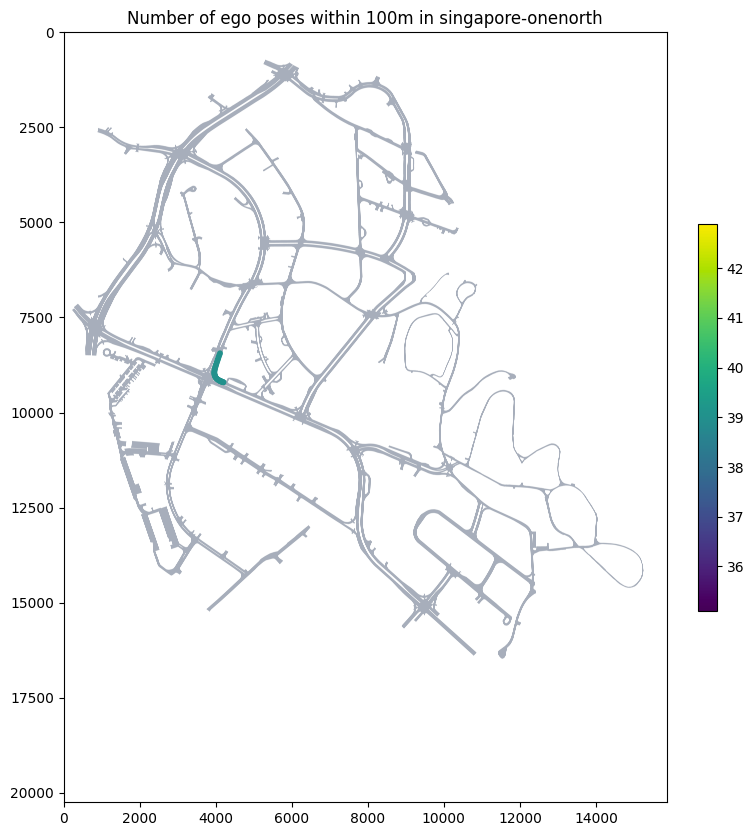

Plotting the ego vehicle's route on the map

nusc.render_egoposes_on_map(log_location='singapore-onenorth')

# Output

Adding ego poses to map...

100%|█████████████████████████████████████████████| 1/1 [00:00<00:00, 37.87it/s]

Creating plot...
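The route drawn on the map is just the sequence of ego_pose translations. As a rough illustration, the sketch below sums the straight-line distance between consecutive (x, y) positions to estimate how far the vehicle drove. `path_length_xy` is a hypothetical helper, and the poses are toy values rather than data from the dataset.

```python
import math


def path_length_xy(translations):
    """Sum of straight-line distances between consecutive (x, y) positions."""
    return sum(
        math.dist(a[:2], b[:2])
        for a, b in zip(translations, translations[1:])
    )


# Toy poses in [x, y, z] form, not taken from the dataset.
poses = [[0.0, 0.0, 0.0], [3.0, 4.0, 0.0], [3.0, 10.0, 0.0]]
print(path_length_xy(poses))  # 5 + 6 = 11.0
```

With the devkit, the translations could be gathered by looking up the ego_pose_token of one sample_data record per sample, for example the LIDAR_TOP keyframe, through `nusc.get('ego_pose', ...)`.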

References

- https://www.nuscenes.org/nuscenes

- https://github.com/nutonomy/nuscenes-devkit/tree/master/docs

- https://colab.research.google.com/github/nutonomy/nuscenes-devkit/blob/master/python-sdk/tutorials/nuscenes_tutorial.ipynb#scrollTo=oCLrP5f9cMOq

- https://zhuanlan.zhihu.com/p/549764492

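This article was written by Chakhsu Lau and is licensed under the Creative Commons Attribution 4.0 International License.

Unless marked as reposted or otherwise attributed, articles on this site are original work or translations by this site. Please credit the author when reposting.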
